I'm a research scientist at Google DeepMind in San Francisco. Before that I got my Ph.D. in computer science from the Harvard School of Engineering and Applied Sciences, where I was advised by Todd Zickler. Previously, I received a double B.Sc. in physics and in electrical engineering from Tel Aviv University, after which I spent a couple of years as a researcher at Camerai (acquired by Apple), working on real-time computer vision algorithms for mobile devices.

Email / Google Scholar / Twitter / GitHub

I am interested in computer vision and graphics. More specifically, I enjoy working on methods that use domain-specific knowledge of geometry, graphics, and physics to improve computer vision systems.

Jiapeng Tang*, Matthew J. Levine*, Dor Verbin, Stephan J. Garbin, Matthias Nießner, Ricardo Martin Brualla, Pratul Srinivasan, Philipp Henzler
NeurIPS, 2025 (Spotlight)
project page / arXiv
We use a multi-view relighting model to build a lighting-conditioned NeRF that generalizes to unseen lighting and doesn't require per-lighting optimization.

|

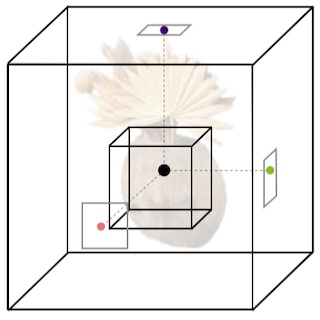

Alexander Mai, Peter Hedman, George Kopanas, Dor Verbin, David Futschik, Qiangeng Xu, Falko Kuester, Jonathan T. Barron, Yinda Zhang ICCV, 2025 (Oral Presentation) project page / code / arXiv We raytrace constant-density ellipsoids, which results in a more accurate and flexible model compared with Gaussian splatting, while still running in real-time. |

Hadi Alzayer, Philipp Henzler, Jonathan T. Barron, Jia-Bin Huang, Pratul Srinivasan, Dor Verbin
CVPR, 2025 (Highlight)
project page / arXiv
We train a generative model to turn images taken under extremely different illumination into a consistently lit set of images, which can then be reconstructed in 3D.

Alex Trevithick, Roni Paiss, Philipp Henzler, Dor Verbin, Rundi Wu, Hadi Alzayer, Ruiqi Gao, Ben Poole, Jonathan T. Barron, Aleksander Holynski, Ravi Ramamoorthi, Pratul Srinivasan
CVPR, 2025
project page / arXiv
We use a video model to simulate inconsistencies during capture in order to learn how to get consistent 3D reconstructions from inconsistent captures.

Dor Verbin, Pratul Srinivasan, Peter Hedman, Benjamin Attal, Ben Mildenhall, Richard Szeliski, Jonathan T. Barron
SIGGRAPH Asia, 2024
project page / arXiv
Casting reflection cones inside NeRF lets us synthesize photorealistic specularities in real-world scenes.

Xiaoming Zhao, Pratul Srinivasan, Dor Verbin, Keunhong Park, Ricardo Martin Brualla, Philipp Henzler
NeurIPS, 2024
project page / arXiv
We do 3D relighting by sampling from a single-image relighting diffusion model and distilling the samples into a latent-variable NeRF.

Benjamin Attal, Dor Verbin, Ben Mildenhall, Peter Hedman, Jonathan T. Barron, Matthew O'Toole, Pratul Srinivasan
ECCV, 2024 (Oral Presentation)
project page / arXiv
Using radiance caches, importance sampling, and control variates helps reduce bias in inverse rendering, resulting in better estimates of geometry, materials, and lighting.

|

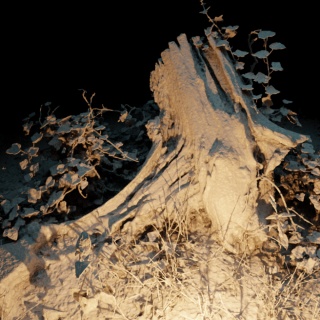

Dor Verbin, Ben Mildenhall, Peter Hedman, Jonathan T. Barron, Todd Zickler, Pratul Srinivasan CVPR, 2024 (Oral Presentation) project page / video / arXiv Shadows cast by unobserved occluders provide a cue for recovering materials and high-frequency illumination from images of diffuse objects. |

Rundi Wu*, Ben Mildenhall*, Philipp Henzler, Keunhong Park, Ruiqi Gao, Daniel Watson, Pratul Srinivasan, Dor Verbin, Jonathan T. Barron, Ben Poole, Aleksander Holynski*
CVPR, 2024
project page / arXiv
We fine-tune an image diffusion model to accept multiview inputs, then use it to regularize radiance field reconstruction.

Xiaojuan Wang, Janne Kontkanen, Brian Curless, Steve Seitz, Ira Kemelmacher, Ben Mildenhall, Pratul Srinivasan, Dor Verbin, Aleksander Holynski
CVPR, 2024 (Highlight)
project page / arXiv
We use a generative text-to-image model to create videos with extreme zoom-ins.

Christian Reiser, Stephan J. Garbin, Pratul Srinivasan, Dor Verbin, Richard Szeliski, Ben Mildenhall, Jonathan T. Barron, Peter Hedman*, Andreas Geiger*
SIGGRAPH, 2024
project page / video / arXiv
Applying anti-aliasing to a discrete opacity grid lets you render a hard representation into a soft image, and this enables highly detailed mesh recovery.

Mia G. Polansky, Charles Herrmann, Junhwa Hur, Deqing Sun, Dor Verbin, Todd Zickler
CoRR, 2024
project page / arXiv
We design a new boundary-aware attention mechanism to quickly find boundaries in images with low SNR. The output is similar to that of the field of junctions, but this works ~100x faster!

|

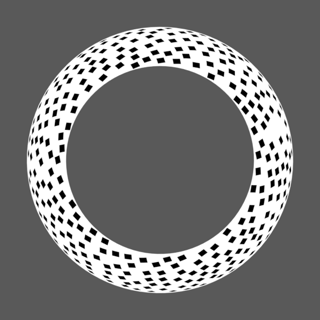

Pratul Srinivasan, Stephan J. Garbin, Dor Verbin, Jonathan T. Barron, Ben Mildenhall ECCV, 2024 project page / video / arXiv We use neural fields to recover editable UV mappings for challenging geometry (e.g. volumetric representations like NeRF or DreamFusion, or meshes extracted from them). |

Jonathan T. Barron, Ben Mildenhall, Dor Verbin, Pratul Srinivasan, Peter Hedman
ICCV, 2023 (Oral Presentation, Best Paper Finalist)
project page / video / arXiv
We combine mip-NeRF 360 and Instant NGP to reconstruct very large scenes.

Alexander Mai, Dor Verbin, Falko Kuester, Sara Fridovich-Keil
ICCV, 2023
project page / video / code / arXiv
We treat each point in space as an infinitesimal volumetric surface element. Using Monte Carlo (MC) rendering to fit this representation to a collection of images yields accurate geometry, materials, and illumination.

Lior Yariv*, Peter Hedman*, Christian Reiser, Dor Verbin, Pratul Srinivasan, Richard Szeliski, Jonathan T. Barron, Ben Mildenhall
SIGGRAPH, 2023
project page / video / arXiv
We achieve real-time view synthesis by baking a high-quality mesh and fine-tuning a lightweight appearance model on top.

Christian Reiser, Richard Szeliski, Dor Verbin, Pratul Srinivasan, Ben Mildenhall, Andreas Geiger, Jonathan T. Barron, Peter Hedman
SIGGRAPH, 2023
project page / video / arXiv
We achieve real-time view synthesis using a volumetric rendering model with a compact representation that combines a low-resolution 3D feature grid with high-resolution 2D feature planes.

|

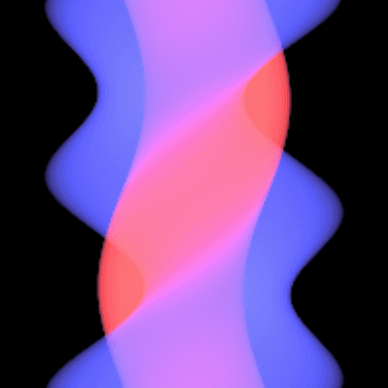

Dor Verbin, Peter Hedman, Ben Mildenhall, Todd Zickler, Jonathan T. Barron, Pratul Srinivasan CVPR, 2022 (Oral Presentation, Best Student Paper Honorable Mention) project page / arXiv / code / video We modify NeRF's representation of view-dependent appearance to improve its representation of specular appearance, and recover accurate surface normals. Our method also enables view-consistent scene editing. |

Jonathan T. Barron, Ben Mildenhall, Dor Verbin, Pratul Srinivasan, Peter Hedman
CVPR, 2022 (Oral Presentation)
project page / arXiv / code / video
We extend mip-NeRF to produce realistic results on unbounded scenes.

|

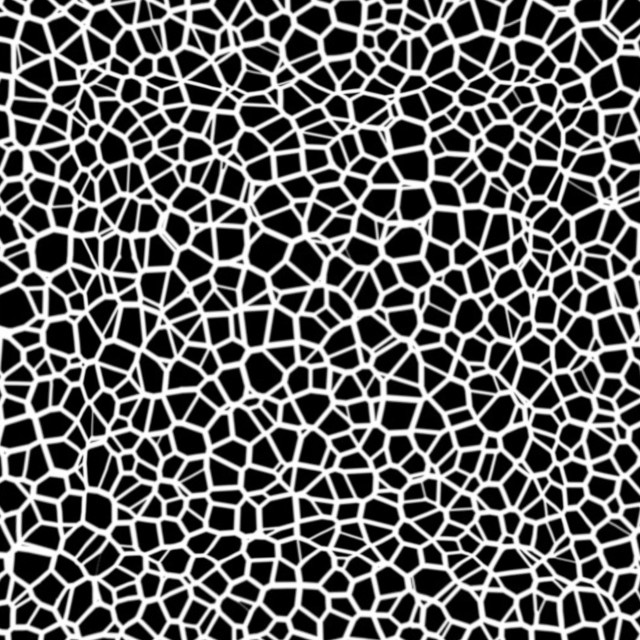

Dor Verbin, Todd Zickler ICCV, 2021 project page / arXiv / code / video By modeling each patch in an image as a generalized junction, our model uses concurrencies between different boundary elements such as junctions, corners, and edges, and manages to extract boundary structure from extremely noisy images where previous methods fail. |

|

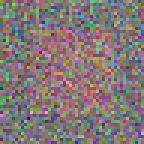

Dor Verbin, Steven J. Gortler, Todd Zickler TPAMI, 2021 arXiv / paper / video (combined with SfT) We present a simple condition for the uniqueness of a solution to the shape from texture problem. We show that in the general case four views of a cyclostationary texture satisfy this condition and are therefore sufficient to uniquely determine shape. |

Dor Verbin, Todd Zickler
CVPR, 2020
project page / paper / supplement / code and data / video
We formulate the shape from texture problem as a 3-player game. This game simultaneously estimates the underlying flat texture and object shape, and it succeeds for a large variety of texture types.

I'm also using Jon's website template.